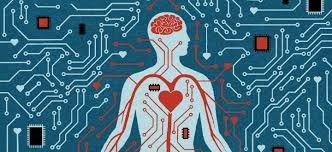

The ethics of deep learning face a critical tipping point as AI systems increasingly shape everyday decisions in your life. When Amazon’s AI recruiting tool discriminated against women by downgrading resumes that contained the word “women’s,” it wasn’t a mere technical glitch but a wake-up call about hidden biases lurking within seemingly objective algorithms. You might assume these powerful systems operate fairly, but the reality paints a different picture.

In fact, AI ethics extends far beyond isolated incidents. Bias in deep learning often emerges from training data that reflects historical prejudices, leading to discriminatory outcomes that affect real people, particularly those from underrepresented or marginalised groups. This raises serious questions about fairness in deep learning systems and how to ensure they serve everyone equally. As these technologies become more embedded in healthcare, criminal justice, and finance, your understanding of these ethical challenges becomes crucial. Whether you’re pursuing an IISc deep learning course or considering a deep learning certification, grasping the ethical dimensions of AI will be as essential as the technical skills themselves.

The roots of bias in deep learning systems

Deep learning systems learn their patterns from the data they consume. Much like a child absorbs values from their environment, these powerful systems mirror the information they’re trained on. The critical difference, however, lies in their inability to question what they’ve learned. They simply reflect patterns without moral judgement.

Bias in deep learning primarily stems from several interconnected sources, including:

- The training data itself often contains embedded historical prejudices and societal inequalities, which a model then learns and reproduces.

- The methods used to collect and process data introduce additional layers of bias. Representation bias occurs when certain groups are underrepresented in training samples.

- Beyond the data, algorithm design itself can introduce bias. The architecture choices, feature selection, and optimisation functions all influence how a model interprets information.

- Human decisions throughout the development process also introduce cognitive biases. From data labelling to model evaluation, developers’ unconscious assumptions shape AI systems in subtle ways. As you might learn in an IISc deep learning course, these human factors are often the most difficult biases to identify and correct.
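A simple first check for representation bias is to compare each group’s share of the training data with its share of the population the model is meant to serve. The sketch below assumes every training example carries a group label and that a reference distribution is known (here a hypothetical 50/50 split); the function name `representation_report` is illustrative, not from any library.

```python
from collections import Counter


def representation_report(groups, reference_shares):
    """Ratio of each group's observed training share to its reference share.

    groups: one group label per training example (assumed available).
    reference_shares: dict of group -> expected share, e.g. from census data.
    A ratio well below 1.0 means the group is underrepresented.
    """
    counts = Counter(groups)
    total = sum(counts.values())
    report = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        report[group] = observed / expected
    return report


# Hypothetical toy data: 80 examples from group "A", 20 from group "B",
# while the assumed reference population is split evenly.
training_groups = ["A"] * 80 + ["B"] * 20
ratios = representation_report(training_groups, {"A": 0.5, "B": 0.5})
print(ratios)  # {'A': 1.6, 'B': 0.4}
```

Group “B” appears at only 40% of its expected rate, which is exactly the kind of gap that later shows up as worse model performance for that group.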

Real-world consequences of unchecked bias

Unchecked bias in deep learning manifests in troubling real-world scenarios that affect everyday lives. Consider Amazon’s AI recruiting tool, which systematically downgraded resumes containing the word “women’s” and even penalised graduates of all-women’s colleges. Because the model had learned from a decade of resumes submitted mostly by men, it essentially taught itself that male candidates were preferable, and the company eventually disbanded the project.

Credit scoring systems face similar challenges: algorithmic bias can lead to unfair lending decisions. When deep learning models reflect historical prejudices, they can deny individuals from certain demographic backgrounds access to credit or subject them to higher interest rates. These financial gatekeeping mechanisms perpetuate broader societal inequities, hindering economic mobility for already marginalised communities.
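One common way to quantify unfair lending outcomes is to compare approval rates between groups, a measure often called the disparate impact ratio. The “four-fifths” rule of thumb from US employment-selection guidance treats a ratio below 0.8 as a warning sign; applying it to lending here is an illustrative assumption, not a legal test. The decisions and group labels below are hypothetical toy data.

```python
def approval_rates(decisions, groups):
    """Approval rate (share of decisions equal to 1) for each group."""
    totals, approved = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + decision
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions, groups, privileged):
    """Each other group's approval rate divided by the privileged group's rate."""
    rates = approval_rates(decisions, groups)
    base = rates[privileged]
    return {g: r / base for g, r in rates.items() if g != privileged}


# Hypothetical toy data: 1 = loan approved, 0 = declined.
decisions = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7
groups = ["X"] * 10 + ["Y"] * 10

di = disparate_impact_ratio(decisions, groups, privileged="X")
print(di)  # {'Y': 0.5}
for group, ratio in di.items():
    if ratio < 0.8:  # four-fifths rule of thumb (assumed threshold)
        print(f"group {group}: ratio {ratio:.2f} is below 0.8, review needed")
```

A ratio like this does not prove discrimination on its own, but it tells you where to look before the model reaches real applicants.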

The consequences extend beyond finance into healthcare, where AI systems trained on limited datasets provide less accurate predictions for underrepresented groups. For instance, algorithms used for breast cancer risk assessment may incorrectly classify Black patients as “low risk,” resulting in fewer follow-up scans and potentially more undiagnosed cases. Students pursuing a deep learning certification must understand these life-altering implications when designing healthcare algorithms.
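In the breast cancer example, labelling a high-risk patient as “low risk” is a false negative. Comparing false negative rates across groups (the idea behind the “equal opportunity” fairness criterion) turns that failure mode into a number you can track. The sketch below uses hypothetical labels, where 1 means high risk, and an illustrative function name.

```python
def false_negative_rates(y_true, y_pred, groups):
    """Per-group false negative rate: share of true positives predicted negative."""
    positives, misses = {}, {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:  # only truly high-risk cases count
            positives[group] = positives.get(group, 0) + 1
            if pred == 0:  # model said "low risk"
                misses[group] = misses.get(group, 0) + 1
    return {g: misses.get(g, 0) / n for g, n in positives.items()}


# Hypothetical toy data for two patient groups, "P" and "Q".
y_true = [1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 1, 0, 0, 0, 0, 1]
groups = ["P"] * 6 + ["Q"] * 6

print(false_negative_rates(y_true, y_pred, groups))  # {'P': 0.25, 'Q': 0.75}
```

Overall accuracy can look acceptable while one group’s high-risk patients are missed three times as often, which is why per-group error rates matter more than a single headline score.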

Trust erosion represents another significant consequence. When stakeholders discover bias in AI systems, organisations face reputational damage, customer distrust, and potential legal challenges. As highlighted in any comprehensive deep learning course, the costs include expensive technical fixes, lost productivity, and diminished brand value.

Building ethical and fair deep learning models

Creating ethical deep learning models requires a comprehensive approach that goes beyond mere technical fixes. Transparency stands at the foundation of ethical AI, making the decision-making process understandable to humans. Indeed, this visibility must extend to every facet of AI development, from data sources to algorithmic design.

Fairness-aware machine learning offers practical pathways to mitigate bias through three key approaches. Pre-processing techniques transform potentially unfair data before model training. In-processing methods incorporate fairness constraints directly during the learning phase. Meanwhile, post-processing techniques modify the model’s predictions after training to ensure equitable outcomes. For students pursuing a deep learning certification, understanding these complementary approaches provides essential skills for ethical AI development.
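As one concrete pre-processing example, the reweighing technique of Kamiran and Calders gives each training example the weight P(group) × P(label) / P(group, label), so that in the weighted data the label becomes statistically independent of group membership. The sketch below uses hypothetical toy data and illustrative function names; the resulting weights would then be passed to a training loss that accepts per-example weights (for instance, the `sample_weight` argument that many scikit-learn estimators accept in `fit`).

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Kamiran-Calders reweighing: w(g, y) = P(g) * P(y) / P(g, y)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    # Counts form of the formula: (n_g / n) * (n_y / n) / (n_gy / n).
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(weights, groups, labels, group):
    """Share of (weighted) positive labels within one group."""
    pos = sum(w for w, g, y in zip(weights, groups, labels) if g == group and y == 1)
    tot = sum(w for w, g in zip(weights, groups) if g == group)
    return pos / tot


# Hypothetical toy data: group "A" has 3 of 4 positive labels, group "B" only 1 of 4.
groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
for g in ("A", "B"):
    # Unweighted rates are 0.75 and 0.25; weighted, both are about 0.5.
    print(g, round(weighted_positive_rate(weights, groups, labels, g), 3))
```

Reweighing leaves every feature and label untouched and only changes how much each example counts during training, which makes it easy to add to an existing pipeline.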

Human oversight remains irreplaceable in ethical AI systems. Specifically, human experts must be able to understand AI capabilities, detect anomalies, interpret outputs, and override decisions when necessary. This human-in-the-loop approach ensures accountability and prevents over-reliance on automated systems.
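One simple way to build human oversight into a deployed classifier is to act automatically only on confident scores and route the uncertain middle band to a reviewer whose decision overrides the model. The thresholds (0.3 and 0.7), the `Decision` record, and the function names below are hypothetical choices for illustration; in practice the band would be tuned to reviewer capacity and the cost of errors.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    score: float          # model's predicted probability of the positive class
    label: Optional[int]  # final label; None while awaiting human review
    decided_by: str       # "model", "pending_review", or "human"


def route(score: float, low: float = 0.3, high: float = 0.7) -> Decision:
    """Decide automatically only when the model is confident; otherwise defer."""
    if score >= high:
        return Decision(score, 1, "model")
    if score <= low:
        return Decision(score, 0, "model")
    return Decision(score, None, "pending_review")


def human_override(decision: Decision, reviewer_label: int) -> Decision:
    """Record a reviewer's label, replacing whatever the model proposed."""
    return Decision(decision.score, reviewer_label, "human")


decisions = [route(s) for s in (0.9, 0.5, 0.1)]
pending = [d for d in decisions if d.decided_by == "pending_review"]
resolved = human_override(pending[0], reviewer_label=1)
print(resolved)  # Decision(score=0.5, label=1, decided_by='human')
```

Recording who made each final decision (`decided_by`) also leaves an audit trail, which is what makes the accountability in a human-in-the-loop process checkable.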

Data diversity forms another critical pillar of ethical deep learning. When facial recognition systems are trained on diverse faces, they recognise faces more accurately across all demographic groups. Likewise, the teams developing these systems should themselves be diverse in skills, ethnicity, race, and gender, a principle often emphasised in a comprehensive IISc deep learning course.

Interpretability tools allow practitioners to explain model decisions, helping to build trust and identify potential biases. Some bias detection tools can even identify underperforming groups without requiring sensitive demographic data, enabling ongoing monitoring for fairness.
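Permutation importance is one widely used, model-agnostic interpretability technique: shuffle one feature column at a time and measure how much accuracy drops. A large drop for a feature such as a postcode could hint that the model leans on a proxy for a protected attribute. The sketch below is a minimal NumPy version that assumes a `predict` function returning class labels; the toy model and data are hypothetical, and scikit-learn provides a fuller implementation as `sklearn.inspection.permutation_importance`.

```python
import numpy as np


def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled.

    predict: function mapping an (n, d) array to an (n,) array of labels.
    """
    rng = np.random.default_rng(seed)
    baseline = np.mean(predict(X) == y)
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        drops = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Break the link between feature j and the target, keep the rest intact.
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            drops.append(baseline - np.mean(predict(X_perm) == y))
        importances[j] = np.mean(drops)
    return importances


# Hypothetical toy setup: the "model" only looks at feature 0.
rng = np.random.default_rng(42)
X = rng.normal(size=(200, 2))
y = (X[:, 0] > 0).astype(int)


def predict(features):
    return (features[:, 0] > 0).astype(int)


importances = permutation_importance(predict, X, y)
print(importances)  # feature 0 scores high; feature 1 scores exactly 0
```

Because it only needs predictions, this kind of check works the same way on a deep network as on the toy model here, although each repeat costs one full pass over the evaluation data.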

Moving forward: The ethical imperative

As deep learning continues to shape society, the ethical concerns highlighted throughout this article demand your attention. Hidden biases, far from being mere technical glitches, represent fundamental challenges that affect real people’s lives, opportunities, and well-being.

The consequences of unchecked bias prove too significant to ignore. When AI systems discriminate in hiring, lending, or healthcare, they amplify existing social inequalities rather than reducing them. These systems, though powerful, mirror human prejudices without the moral capacity to question them.

Building ethical AI thus becomes both a technical challenge and a moral obligation. Transparency, diverse datasets, human oversight, and fairness-aware algorithms all contribute to more equitable systems. Though perfect fairness remains elusive, your commitment to ethical principles can significantly reduce harmful biases.